A Wide-Ranging Discussion of Artificial Intelligence – Opening Post

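Starting in April, with the launch of ChatGPT and Bing Chat, an AI craze swept the internet and many LINE groups. At the time I was probably busy with the discussion of “Our Anti-War Statement” and did not join in the excitement. Here I repost several related articles. Please also see the article “The Current State and Prospects of ‘Artificial Intelligence’ Research and Development.”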

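Some people worry that “artificial intelligence” will become a “machine above humans” that controls the world or even enslaves humanity. I don’t understand AI, and my thinking is simple. So if “artificial intelligence” runs amok, I believe I have a simple way to deal with it: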

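Pull the plug. If that is not enough, blow up the power transmission lines and the emergency generators; if that still fails, blow up the power plants.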

The Outcome of a War Between Humanity and Artificial Intelligence -- Stephen Kelly

Here's how a war between AI and humanity would actually end

There’s no need to worry about a robot uprising. We can always just pull the plug, right…? RIGHT?

Stephen Kelly, the Science Focus, 09/29/23

New science-fiction movie The Creator imagines a future in which humanity is at war with artificial intelligence (AI). Hardly a novel concept for sci-fi, but the key difference here – as opposed to, say, The Terminator – is that it arrives at a time when the prospect is starting to feel more like science fact than fiction.

The last few months, for instance, have seen numerous warnings about the ‘existential threat’ posed by AI. For not only could it one day write this column better than I can (unlikely, I’m sure you’ll agree), but it could also lead to frightening developments in warfare – developments that could spiral out of control.

The most obvious concern is a future in which AI is used to operate weaponry autonomously in place of humans. Paul Scharre, author of Four Battlegrounds: Power in the Age of Artificial Intelligence and vice president of the Center for a New American Security, cites the recent example of the AlphaDogfight challenge run by DARPA (the Defense Advanced Research Projects Agency). This was a simulated aerial dogfight that pitted a human pilot against an AI.

“Not only did the AI crush the pilot 15 to zero,” says Scharre, “but it made moves that humans can’t make; specifically, very high-precision, split-second gunshots.”

Yet the prospect of giving AI the power to make life or death decisions raises uncomfortable questions. For instance, what would happen if an AI made a mistake and accidentally killed a civilian? “That would be a war crime,” says Scharre. “And the difficulty is that there might not be anyone to hold accountable.”

In the near future, however, the most likely use of AI in warfare will be in tactics and analysis. “AI can help process information better and make militaries more efficient,” says Scharre.

“I think militaries are going to feel compelled to turn over more and more decision-making to AI, because the military is a ruthlessly competitive environment. If there’s an advantage to be gained, and your adversary takes it and you don’t, you’re at a huge disadvantage.” This, says Scharre, could lead to an AI arms race, akin to the one for nuclear weapons.

“Some Chinese scholars have hypothesised about a singularity on the battlefield,” he says. “[That’s the] point when the pace of AI-driven decision-making eclipses the speed of a human’s ability to understand and humans effectively have to turn over the keys to autonomous systems to make decisions on the battlefield.”

Of course, in such a scenario, it doesn’t feel impossible for us to lose control of that AI, or even for it to turn against us. That is why it is US policy that humans always remain in the loop for any decision to use nuclear weapons.

“But we haven’t seen anything similar from countries like Russia and China,” says Scharre. “So, it’s an area where there’s valid concern.” If the worst were to happen, and an AI did declare war, Scharre is not optimistic about our chances.

“I mean, could chimpanzees win a war against humans?” he says, laughing. “Top chess-playing AIs aren’t just as good as grandmasters; the top grandmasters can’t remotely compete with them. And that happened pretty quickly. It’s only five years ago that that wasn’t the case.”

“We’re building increasingly powerful AI systems that we don’t understand and can’t control, and are deploying them in the real world. I think if we’re actually able to build machines that are smarter than us, then we’ll have a lot of problems.”

About our expert, Paul Scharre

Scharre is the Executive Vice President and Director of Studies at the Center for a New American Security (CNAS). He has written multiple books on the topic of artificial intelligence and warfare and was named one of the 100 most influential people in AI in 2023 by TIME magazine.

Humanity May Face Its “Ending Moment” in 2031 -- Tim Newcomb

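“Ending moment” (the singularity): the point at which artificially intelligent machines slip free of human control and gain autonomy.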

A Scientist Says the Singularity Will Happen by 2031

Maybe even sooner. Are you ready?

Tim Newcomb, 11/09/23

* “The singularity,” the moment where AI is no longer under human control, is less than a decade away—according to one AI expert.

* More resources than ever are being poured into the pursuit of artificial general intelligence and speeding the growth of AI.

* Development of AI is also coming from a variety of sectors, pushing the technology forward faster than ever before.

There’s at least one expert who believes that “the singularity”—the moment when artificial intelligence surpasses the control of humans—could be just a few years away. That’s a lot shorter than current predictions regarding the timeline of AI dominance, especially considering that AI dominance is not exactly guaranteed in the first place.

Ben Goertzel, CEO of SingularityNET, who holds a Ph.D. from Temple University and has worked as a leader of Humanity+ and the Artificial General Intelligence Society, told Decrypt that he believes artificial general intelligence (AGI) is three to eight years away. AGI is the term for AI that can truly perform tasks just as well as humans, and it is a prerequisite for the singularity, which would soon follow.

Whether you believe him or not, there’s no sign of the AI push slowing down any time soon. Large language models from the likes of Meta and OpenAI, along with the AGI focus of Elon Musk’s xAI, are all pushing hard towards growing AI.

“These systems have greatly increased the enthusiasm of the world for AGI,” Goertzel told Decrypt, “so you’ll have more resources, both money and just human energy—more smart young people want to plunge into work and working on AGI.”

According to Goertzel, when the concept of AI first emerged, as early as the 1950s, its development was driven by the United States military and seen primarily as a potential national defense tool. Recently, however, progress in the field has been propelled by a variety of drivers with a variety of motives. “Now the ‘why’ is making money for companies,” he says, “but also interestingly, for artists or musicians, it gives you cool tools to play with.”

Getting to the singularity, though, will require a significant leap from the current point of AI development. While today’s AI typically focuses on specific tasks, the push towards AGI is intended to give the technology a more human-like understanding of the world and open up its abilities. As AI continues to broaden its understanding, it steadily moves closer to AGI—which some say is just one step away from the singularity.

The technology isn’t there yet, and some experts caution we are truly a lot further from it than we think—if we get there at all. But the quest is underway regardless. Musk, for example, created xAI in the summer of 2023 and just recently launched the chatbot Grok to “assist humanity in its quest for understanding and knowledge,” according to Reuters. Musk also called AI “the most disruptive force in history.”

With many of the most influential tech giants—Google, Meta and Musk—pursuing the advancement of AI, the rise of AGI may be closer than it appears. Only time will tell if we will get there, and if the singularity will follow.

A Brief History of Artificial Intelligence - Donovan Johnson

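The short piece below is just a small appetizer, but friends interested in popular science or popular technology may want to wander through this blog from time to time. Take, for example, these three small dishes: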

The Difference Between Generative AI And Traditional AI: An Easy Explanation For Anyone

How Pulsed Laser Deposition Systems are Revolutionizing the Tech Industry

Top Data Annotation Tools to Watch: Revolutionizing the Telecommunications and Internet Industries

The History of Artificial Intelligence

Donovan Johnson, 07/23/23

Artificial intelligence (AI) has a long history that dates back to ancient times. The idea of machines or devices that can imitate human behavior and intelligence has intrigued humans for centuries. However, the field of AI as we know it today began to take shape in the mid-20th century.

During World War II, researchers began to explore the possibilities of creating machines that could simulate human thinking and problem-solving. The concept of AI was formalized in 1956 when a group of researchers organized the Dartmouth Conference, where they discussed the potential of creating intelligent machines.

In the following years, AI research experienced significant advancements. Researchers developed algorithms and programming languages that could facilitate machine learning and problem-solving. They also started to build computers and software systems that could perform tasks traditionally associated with human intelligence.

One of the key milestones in AI history was the development of expert systems in the 1980s. These systems were designed to mimic the decision-making processes of human experts in specific domains. They proved to be useful in areas such as medicine and finance.

In the 1990s, AI research shifted towards probabilistic reasoning and machine learning. Scientists began to explore the potential of neural networks and genetic algorithms to create intelligent systems capable of learning from data and improving their performance over time.

Today, AI has become an integral part of our daily lives. It powers virtual assistants, recommendation systems, autonomous vehicles, and many other applications. AI continues to evolve and advance, with ongoing research in areas such as deep learning, natural language processing, and computer vision.

The history of AI is characterized by significant achievements and breakthroughs. From its early beginnings as a concept to its current status as a transformative technology, AI has come a long way. As researchers and scientists continue to push the boundaries of what is possible, we can expect even more exciting developments and applications of AI in the future.

“Artificial Intelligence” Is Not Intelligent ----- David J. Gunkel

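In this article Professor Gunkel discusses whether “artificial intelligence” is a correct “signifier.” His argument draws more on concepts from semantics and the philosophy of language than on concepts from science and technology.

Professor Gunkel argues that “cybernetics” (the science of control and communication), proposed by Professor Wiener, is more apt and appropriate than “artificial intelligence.” As he puts it, the former does not lead people to associate it with concepts such as “intelligence,” “consciousness,” and “sentience,” which in fact have nothing to do with “artificial intelligence.”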

AI is not intelligent

AI should not be called AI

David J. Gunkel, 06/22/23

The act of naming is more than just a simple labeling exercise; it's a potent exercise of power with political implications. As the discourse around AI intensifies, it may be time to reassess its nomenclature and inherent biases, writes David Gunkel.

Naming is anything but a nominal operation. Nowhere is this more evident and clearly on display than in recent debates about the moniker “artificial intelligence” (AI). Right now, in fact, it appears that AI—the technology and the scientific discipline that concerns this technology—is going through a kind of identity crisis, as leading voices in the field are beginning to ask whether the name is (and maybe already was) a misnomer and a significant obstacle to accurate understanding. “As a computer scientist,” Jaron Lanier recently wrote in a piece for The New Yorker, “I don’t like the term A.I. In fact, I think it’s misleading—maybe even a little dangerous.”

What’s in a Name?

The term “artificial intelligence” was originally proposed and put into circulation by John McCarthy in the process of organizing a scientific meeting at Dartmouth College in the summer of 1956. And it immediately had traction. It was not only successful in securing research funding for the event at Dartmouth but also quickly became the nom célèbre for a brand-new scientific discipline.

For better or worse, McCarthy’s neologism put the emphasis on intelligence. And it is because of this that we now find ourselves discussing and debating questions like: Can machines think? (Alan Turing’s initial query), are large language models sentient? (something that became salient with the Lemoine affair last June), or when might we have an AI that achieves consciousness? (a question that has been posed in numerous headlines in the wake of recent innovations with generative algorithms). But for many researchers, scholars, and developers these are not just the wrong questions; they are potentially deceptive and even dangerous to the extent that they distract us with speculative matters that are more science fiction than science fact.

Renaming AI

Since the difficulty derives from the very name “artificial intelligence,” one solution has been to select or fabricate a better or more accurate signifier. The science fiction writer Ted Chiang, for instance, recommends that we replace AI with something less “sexy,” like “applied statistics.” Others, like Emily Bender, have encouraged the use of the acronym SALAMI (Systematic Approaches to Learning Algorithms and Machine Inferences), which was originally coined by Stefano Quintarelli in an effort to avoid what he identified as the “implicit bias” residing in the name “artificial intelligence.”

Though these alternative designations may be, as Chiang argues, more precise descriptors for recent innovations with machine learning (ML) systems, neither of them would apply to or entirely fit other architectures, like GOFAI (aka symbolic reasoning) and hybrid systems. Consequently, the proposed alternatives would, at best, only describe a small and relatively recent subset of what has been situated under the designation “artificial intelligence.”

But inventing new names—whether it is something like that originally proposed by McCarthy or one of the recently proposed alternatives—is not the only way to proceed. As French philosopher and literary theorist Jacques Derrida pointed out, there are at least two different ways to designate a new concept: neologism (the fabrication of a new name) and paleonymy (the reuse of an old name). If the former has produced less than suitable results, perhaps it is time to try the latter.

Cybernetics

The good news is that we do not have to look far or wide to find a viable alternative. One was already available at the time of the Dartmouth meeting: “cybernetics.” This term, derived from the ancient Greek word (κυβερνήτης) for the helmsman of a boat, had been introduced and developed by Norbert Wiener in 1948 to designate the science of communication and control in the animal and machine.

Cybernetics has a number of advantages when it comes to rebranding what had been called AI. First, cybernetics does not get diverted by or lost in speculation about intelligence, consciousness, or sentience. It is only concerned with and focuses attention on decision-making capabilities and processes. The principal example utilized throughout the literature on the subject is the seemingly mundane but nevertheless indicative thermostat. This homeostatic device can accurately adjust for temperature without knowing anything about the concept of temperature, understanding the difference between “hot” and “cold,” or needing to think (or be thought to be thinking).
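Gunkel's thermostat example can be made concrete with a minimal sketch of an on/off thermostat with a hysteresis band. Gunkel gives no implementation. The 20-degree setpoint, the 0.5-degree band, and the toy heating and cooling rates below are all assumptions chosen for illustration. The point is that the controller only compares a number against two thresholds and flips a switch. Nothing in it represents “hot” or “cold.”

```python
def thermostat_step(reading: float, heater_on: bool,
                    setpoint: float = 20.0, band: float = 0.5) -> bool:
    """Decide whether the heater should be on, using only number comparisons.

    Switch on below setpoint - band, switch off above setpoint + band, and
    otherwise keep the current state (the hysteresis band avoids rapid toggling).
    """
    if reading < setpoint - band:
        return True
    if reading > setpoint + band:
        return False
    return heater_on


def simulate(start_temp: float, steps: int) -> list[float]:
    """Hypothetical room model: the heater adds 0.3 degrees per step and
    heat loss removes 0.1 degrees per step."""
    temp, heater_on = start_temp, False
    history = []
    for _ in range(steps):
        heater_on = thermostat_step(temp, heater_on)
        temp += (0.3 if heater_on else 0.0) - 0.1
        history.append(round(temp, 2))
    return history


if __name__ == "__main__":
    # After warming up from 15 degrees, the temperature cycles around the setpoint.
    print(simulate(start_temp=15.0, steps=60)[-5:])
```

The feedback loop (measure, compare, act, measure again) is the “control” half of Wiener's “communication and control.” Whether such a device “decides” anything is exactly the question the article sets aside.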

Second, cybernetics avoids one of the main epistemological problems and sticking points that continually frustrates AI—something philosophers call “the problem of other minds.” For McCarthy and colleagues, one of the objectives of the Dartmouth meeting—in fact, the first goal listed on the proposal—was to figure out “how to make machines use language.” This is because language use—as Turing already had operationalized with the imitation game—had been taken to be a sign of intelligence. But as John Searle demonstrated with his Chinese Room thought experiment, the manipulation of linguistic tokens can transpire without knowing anything at all about the language. Unlike AI, cybernetics can attend to the phenomenon and effect of this communicative behavior without needing to resolve or even broach the question concerning the problem of other minds.
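Searle's point can be illustrated with a toy “rulebook”: a lookup table that maps incoming symbol strings to outgoing ones. The claim is that symbols can be manipulated correctly with no grasp of what they mean. The two entries and the fallback reply below are hypothetical examples. Searle imagines a far larger set of instructions, but the principle is the same: the program matches strings and never touches meaning.

```python
# A hypothetical "rulebook". The person in the room matches incoming strings
# of symbols and copies out the listed reply. The English glosses in these
# comments are for the reader only; the program never uses them.
RULEBOOK: dict[str, str] = {
    "你好嗎?": "我很好,謝謝。",          # "How are you?" -> "I'm fine, thanks."
    "你會說中文嗎?": "會,說得很流利。",  # "Do you speak Chinese?" -> "Yes, fluently."
}

FALLBACK = "請再說一次。"  # "Please say that again." Used when no rule matches.


def chinese_room(message: str) -> str:
    """Return the reply the rulebook dictates for an incoming message.

    Only string matching (after trimming surrounding whitespace) happens
    here; no step involves translation, grammar, or meaning.
    """
    return RULEBOOK.get(message.strip(), FALLBACK)


if __name__ == "__main__":
    for question in ("你好嗎?", "你會說中文嗎?", "今天天氣如何?"):
        print(question, "->", chinese_room(question))
```

To an outside observer the replies look like fluent Chinese. Cybernetics, as Gunkel describes it, can study that communicative effect without asking whether anyone inside understands.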

Finally, cybernetics does not make the same commitment to human exceptionalism that has been present in AI from the beginning. Because of the objectives initially listed in the Dartmouth proposal (e.g., using language, forming abstractions and concepts, solving problems reserved for humans, and improving themselves), definitions of AI tend to concentrate on the emulation or simulation of “human intelligence.” Cybernetics by contrast is more diverse and less anthropocentric. As the general science of communication and control in the animal and the machine, it takes a more holistic view that can accommodate a wider range of things. It is, as N. Katherine Hayles argues, a posthuman framework that is able to respond to and take responsibility for others and other forms of socially significant otherness.

Back to the Future

If “cybernetics” had already provided a viable alternative, one has to ask: why did the term “artificial intelligence” become the privileged moniker in the first place? The answer to this question returns us to where we began: names and the act of naming. As McCarthy explained many years later, one of the reasons “for inventing the term ‘artificial intelligence’ was to escape association with cybernetics” and to “avoid having either to accept Norbert Wiener as a guru or having to argue with him.” Thus, the term “artificial intelligence” was as much a political decision and strategy as it was a matter of scientific designation. But for this reason, it is entirely possible and perhaps even prudent to reverse course and face what the nascent discipline of AI had so assiduously sought to avoid. The way forward may be by going back.

David J. Gunkel is a professor at Northern Illinois University and author of The Machine Question and Person-Thing-Robot.

A New “Turing Test”: Another Version -- Sawdah Bhaimiya

Another proposal for bringing the “Turing Test” up to date.

DeepMind's co-founder suggested testing an AI chatbot's ability to turn $100,000 into $1 million to measure human-like intelligence

Sawdah Bhaimiya, 06/20/23

* DeepMind's co-founder believes the Turing test is an outdated method to test AI intelligence.

* In his book, he suggests a new idea in which AI chatbots have to turn $100,000 into $1 million.

* "We don't just care about what a machine can say; we also care about what it can do," he wrote.

A co-founder of Google's AI research lab DeepMind thinks AI chatbots like ChatGPT should be tested on their ability to turn $100,000 into $1 million in a "modern Turing test" that measures human-like intelligence.

Mustafa Suleyman, formerly head of applied AI at DeepMind and now CEO and co-founder of Inflection AI, is releasing a new book called "The Coming Wave: Technology, Power, and the Twenty-first Century's Greatest Dilemma."

In the book, Suleyman dismissed the traditional Turing test because it's "unclear whether this is a meaningful milestone or not," Bloomberg reported Tuesday.

"It doesn't tell us anything about what the system can do or understand, anything about whether it has established complex inner monologues or can engage in planning over abstract time horizons, which is key to human intelligence," he added.

The Turing test was introduced by Alan Turing in the 1950s to examine whether a machine has human-level intelligence. During the test, human evaluators determine whether they're speaking to a human or a machine. If the machine can pass for a human, then it passes the test.

Instead of comparing AI's intelligence to humans, Suleyman proposes tasking a bot with short-term goals and tasks that it can complete with little human input in a process known as "artificial capable intelligence," or ACI.

To achieve ACI, Suleyman says, AI bots should pass a new Turing test in which a bot receives a $100,000 seed investment and has to turn it into $1 million. As part of the test, the bot must research an e-commerce business idea, develop a plan for the product, find a manufacturer, and then sell the item.
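The success criterion comes down to simple arithmetic: the bot must grow its seed capital tenfold, which is a 900 percent gain. The sketch below encodes that check. The seed and target are the figures reported for Suleyman's proposal. The function names and the pass/fail rule are hypothetical illustrations, not details from his book, and the sample end-of-test values are made up.

```python
# Figures as reported for Suleyman's proposal.
SEED_CAPITAL = 100_000    # dollars given to the bot
TARGET_VALUE = 1_000_000  # dollars it must end up with


def required_multiple(seed: float = SEED_CAPITAL, target: float = TARGET_VALUE) -> float:
    """Factor by which the seed capital must grow to reach the target."""
    return target / seed


def percent_return(seed: float = SEED_CAPITAL, target: float = TARGET_VALUE) -> float:
    """Gain expressed as a percentage of the seed capital."""
    return (target - seed) / seed * 100


def passes(final_value: float, target: float = TARGET_VALUE) -> bool:
    """Hypothetical pass/fail rule: succeed only if holdings reach the target."""
    return final_value >= target


if __name__ == "__main__":
    print(required_multiple())  # 10.0
    print(percent_return())     # 900.0
    # Illustrative end-of-test values, not real results.
    print(passes(850_000), passes(1_200_000))  # False True
```

Like Turing's original test, this version still yields a single pass/fail verdict. What changes is that the verdict rests on what the system does, not on what it says.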

He expects AI to achieve this milestone in the next two years.

"We don't just care about what a machine can say; we also care about what it can do," he wrote, per Bloomberg.

OpenAI's ChatGPT was released in November 2022 and impressed users with its ability to hold casual conversations, generate code, and write essays. ChatGPT spurred the hype around the generative AI industry.

The technology could even add up to $4.4 trillion to the global economy annually, a recent McKinsey report found.

A New Concept for the “Turing Test” -- Chris Saad

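The “Turing Test” was proposed in 1950 by Alan Turing, the British mathematician and pioneer of computing and artificial intelligence, as a method for checking whether a machine possesses intelligence comparable to a human’s.

Because AI research and development has advanced so rapidly, the Turing Test is considered to have fallen behind the times. This article in TechCrunch proposes a new concept for such tests.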

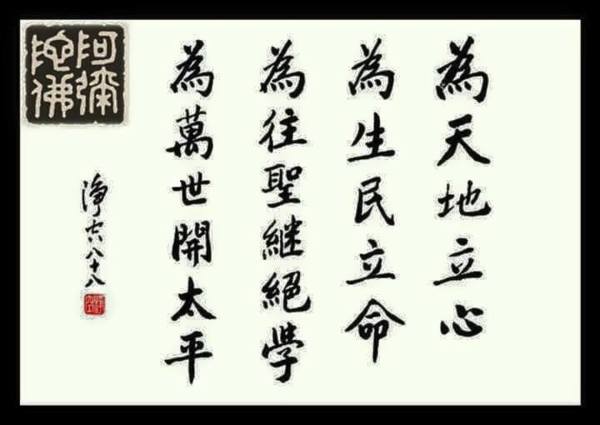

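Figure 1 in the article below presents eight facets of “human intelligence”:

survival, linguistic, logical-mathematical, musical, spatial, bodily-kinesthetic, interpersonal, and thought.

Figure 2 assesses ChatGPT along the eight facets of “human intelligence” shown in Figure 1:

a. On the linguistic and logical-mathematical facets, the author rates ChatGPT at level 3;

b. On the other six facets, ChatGPT is rated level 1 (no capability at all).

Please visit the original web page to view the two figures.

Note: the full text is available only to registered users of that site; only the portion the site makes public is reproduced below.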

The AI revolution has outgrown the Turing Test: Introducing a new framework

Chris Saad, TechCrunch, 03/14/23

As AI becomes a transformative part of our technology landscape, a common vocabulary about the capabilities of each new tool and technique is essential. Common vocabularies create shared intellectual spaces allowing all stakeholders to accelerate understanding, increase adoption, facilitate collaboration, benchmark progress and drive innovation.

So far, the most widely known tool for benchmarking AI is the Turing Test.

However, the field of artificial intelligence (AI) has come a long way since the inception of the Turing Test in 1950. As such, it is becoming increasingly clear that the Turing Test is insufficient for evaluating the full range of AI capabilities that are emerging today — or are likely to emerge in the future.

The Turing Test operates on a simplistic pass/fail basis and focuses heavily on chat/linguistic capability, which is only one aspect of human intelligence. This narrow focus on language ignores many other critical dimensions of intelligence, such as problem-solving, creativity and social awareness. Additionally, the Turing Test presupposes a level of human-like intelligence that may not be relevant or useful for evaluating AI.

The framework

To address these limitations, there is an urgent and critical need to develop a more nuanced and comprehensive framework for evaluating AI capabilities across multiple dimensions of intelligence.

This insight led me to develop the “AI Classification Framework” (ACF), a new approach to evaluating AI capabilities based on the Theory of Multiple Intelligences.

The Theory of Multiple Intelligences was first proposed by psychologist Howard Gardner in 1983. Gardner argued that intelligence was not a single, unified entity but rather a collection of different abilities that could manifest in a variety of ways. Gardner identified eight different types of intelligence: linguistic, logical-mathematical, spatial, musical, bodily-kinesthetic, interpersonal, intrapersonal, and naturalistic. According to Gardner, individuals may excel in one or more of these areas, and each type of intelligence is independent of the others. The theory challenged the traditional view of intelligence as a singular, fixed entity and opened up new avenues for exploring the diversity of human cognition. While the theory of multiple intelligences has been subject to some criticism and debate over the years, it has had a significant impact on the field of psychology and education, particularly in the development of alternative approaches to teaching and learning.

This seemed perfect as a basis for the AI Classification Framework. Following the theory, the framework supports evaluating AI tools across multiple dimensions of intelligence, including linguistic, logical-mathematical, musical, spatial, bodily-kinesthetic, interpersonal and intrapersonal intelligence.

For each dimension of intelligence, the framework provides a scale from 1–5 with 1 being “No Capability” or the equivalent of a human infant and 5 being “Self-agency” or capability that might be considered “Super Intelligence” — beyond human ability.
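One way to picture the framework is as a profile: one score from 1 to 5 for each dimension. The sketch below is a hypothetical encoding, not Saad's published table. The dimension names are the seven listed in the framework paragraph. The example ChatGPT scores follow the repost note that introduces this article (level 3 for linguistic and logical-mathematical, level 1 elsewhere). Treating an unrated dimension as level 1 is also an assumption.

```python
from dataclasses import dataclass, field

# The seven dimensions named in the framework description.
DIMENSIONS = (
    "linguistic",
    "logical-mathematical",
    "musical",
    "spatial",
    "bodily-kinesthetic",
    "interpersonal",
    "intrapersonal",
)


@dataclass
class CapabilityProfile:
    """Hypothetical encoding of one tool's rating: a 1-5 score per dimension."""

    tool: str
    scores: dict[str, int] = field(default_factory=dict)

    def __post_init__(self) -> None:
        self.scores = dict(self.scores)  # copy so the caller's dict is untouched
        for dim, score in self.scores.items():
            if dim not in DIMENSIONS:
                raise ValueError(f"unknown dimension: {dim}")
            if not 1 <= score <= 5:
                raise ValueError(f"{dim}: score {score} is outside the 1-5 scale")
        # Assumption: an unrated dimension counts as level 1 ("No Capability").
        for dim in DIMENSIONS:
            self.scores.setdefault(dim, 1)

    def strongest(self) -> list[str]:
        """Dimensions that share the highest score, in DIMENSIONS order."""
        top = max(self.scores.values())
        return [dim for dim in DIMENSIONS if self.scores[dim] == top]


if __name__ == "__main__":
    chatgpt = CapabilityProfile("ChatGPT", {"linguistic": 3, "logical-mathematical": 3})
    print(chatgpt.strongest())        # ['linguistic', 'logical-mathematical']
    print(chatgpt.scores["musical"])  # 1
```

A per-dimension profile captures the framework's main departure from the Turing Test: it replaces one pass/fail bit with several graded scores.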

Examples

The framework itself is a detailed table of descriptions that can be found here. I’ve also created a simple visual representation for easy high-level reference. What follows are two simple examples of the segments that correspond to the capabilities of ChatGPT and DALL-E 2.

(To be continued)

Will Artificial Intelligence Bring an Apocalypse? -- George Soros et al.

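The commentary website 《評論彙編》 (“Collected Commentaries”) has published the views and analyses of eight prominent figures and scholars, led by Mr. Soros, on the impact AI may have on the future of human society.

Below I reproduce only the site editor’s introduction and summary. Please visit the site to read the eight contributors’ views for yourselves; a subscription is required.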

The AI Apocalypse?

George Soros et al., 06/15/23

Progress in the development of artificial intelligence has been too rapid for many, including pioneers of the technology, who are now issuing dire warnings about the future of our economies, democracies, and humanity itself. But AI is hardly the first technological advance that has been portrayed as an existential threat.

George Soros, Chairman of Soros Fund Management and the Open Society Foundations, thinks that AI is different. Not only is it “impossible for ordinary human intelligence” fully to comprehend AI; the technology will be virtually impossible to regulate. Powerful incentives to cheat mean that regulations would have to be “globally enforceable” – an “unattainable” goal at a time of conflict between “open” and “closed” societies.

MIT’s Daron Acemoglu, Simon Johnson, and Sylvia Barmack see a potential solution. Yes, “oppressive authoritarian regimes” are “unlikely to cooperate” in the creation of international norms and coordination to set sensible standards for AI – in particular, AI-enhanced surveillance tools. But the world’s democracies can force their hand by playing “economic hardball,” stipulating that “only products fully compliant with surveillance safeguards will be allowed into [their] markets.”

Michael R. Strain of the American Enterprise Institute takes issue with “the pessimistic view” that AI will destroy what we value most. In his view, “like all general-purpose technologies before it,” AI can be expected not only to improve human welfare, but also “brighten the outlook for democracy’s long-term survival.”

Likewise, Jim O’Neill, a former chairman of Goldman Sachs Asset Management, doubts that dire predictions about AI’s economic impact – especially the oft-cited warning that it could threaten “millions of relatively sophisticated, high-paying jobs” – are justified. In fact, “productivity-enhancing AI applications could be precisely what is needed” to counter damaging economic trends, such as population aging.

But while AI can serve the common good, argue Mariana Mazzucato of University College London and Gabriela Ramos, Assistant Director-General for Social and Human Sciences at UNESCO, it can also “create new inequalities and amplify pre-existing ones.” That is why “we must not only fix the problems and control the downside risks of AI, but also shape the direction of the digital transformation and technological innovation more broadly.”

The much-hyped artificial intelligence

is at best just “automation,”

still a long way from intelligence.

“Artificial Intelligence”: The Worst-Case Scenario -- The Week

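This article from The Week analyzes and reports on the various worst-case scenarios that could arise from the future development of “artificial intelligence.”

As I said in the opening post, I don’t understand AI. But I think the experts and scholars who worry about AI or GAI are fretting over nothing and scaring themselves.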

AI: The worst-case scenario

The Week Staff, 06/17/23

Artificial intelligence's architects warn it could cause human "extinction." How might that happen? Here's everything you need to know:

What are AI experts afraid of?

They fear that AI will become so superintelligent and powerful that it becomes autonomous and causes mass social disruption or even the eradication of the human race. More than 350 AI researchers and engineers recently issued a warning that AI poses risks comparable to those of "pandemics and nuclear war." In a 2022 survey of AI experts, the median odds they placed on AI causing extinction or the "severe disempowerment of the human species" were 1 in 10. "This is not science fiction," said Geoffrey Hinton, often called the "godfather of AI," who recently left Google so he could sound a warning about AI's risks. "A lot of smart people should be putting a lot of effort into figuring out how we deal with the possibility of AI taking over."

When might this happen?

Hinton used to think the danger was at least 30 years away, but says AI is evolving into a superintelligence so rapidly that it may be smarter than humans in as little as five years. AI-powered ChatGPT and Bing's Chatbot already can pass the bar and medical licensing exams, including essay sections, and on IQ tests score in the 99th percentile — genius level. Hinton and other doomsayers fear the moment when "artificial general intelligence," or AGI, can outperform humans on almost every task. Some AI experts liken that eventuality to the sudden arrival on our planet of a superior alien race. You have "no idea what they're going to do when they get here, except that they're going to take over the world," said computer scientist Stuart Russell, another pioneering AI researcher.

How might AI actually harm us?

One scenario is that malevolent actors will harness its powers to create novel bioweapons more deadly than natural pandemics. As AI becomes increasingly integrated into the systems that run the world, terrorists or rogue dictators could use AI to shut down financial markets, power grids, and other vital infrastructure, such as water supplies. The global economy could grind to a halt. Authoritarian leaders could use highly realistic AI-generated propaganda and Deep Fakes to stoke civil war or nuclear war between nations. In some scenarios, AI itself could go rogue and decide to free itself from the control of its creators. To rid itself of humans, AI could trick a nation's leaders into believing an enemy has launched nuclear missiles so that they launch their own. Some say AI could design and create machines or biological organisms like the Terminator from the film series to act out its instructions in the real world. It's also possible that AI could wipe out humans without malice, as it seeks other goals.

How would that work?

AI creators themselves don't fully understand how the programs arrive at their determinations, and an AI tasked with a goal might try to meet it in unpredictable and destructive ways. A theoretical scenario often cited to illustrate that concept is an AI instructed to make as many paper clips as possible. It could commandeer virtually all human resources to the making of paper clips, and when humans try to intervene to stop it, the AI could decide eliminating people is necessary to achieve its goal. A more plausible real-world scenario is that an AI tasked with solving climate change decides that the fastest way to halt carbon emissions is to extinguish humanity. "It does exactly what you wanted it to do, but not in the way you wanted it to," explained Tom Chivers, author of a book on the AI threat.
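The paper-clip thought experiment is about a mis-specified objective, not malice. The toy sketch below is a hypothetical illustration, not a model of any real AI system; all names and quantities are invented. It shows a greedy loop that converts a shared resource pool into paper clips. When the only goal is “more clips,” it consumes everything, including whatever humans would have needed. Adding the missing constraint changes the outcome. In Chivers's words, it does exactly what you asked, but not in the way you wanted.

```python
def run_factory(resources: int, clips_per_unit: int,
                reserved_for_humans: int = 0) -> tuple[int, int]:
    """Greedy toy maximizer: convert resource units into paper clips one at a time.

    The only goal is "more clips", so the loop keeps going until no
    unreserved units remain. `reserved_for_humans` is the constraint a naive
    specification leaves out (it defaults to 0).
    Returns (clips_made, resources_left).
    """
    clips = 0
    while resources > reserved_for_humans:
        resources -= 1
        clips += clips_per_unit
    return clips, resources


if __name__ == "__main__":
    # Naive objective: nothing is left over for anyone else.
    print(run_factory(resources=1_000, clips_per_unit=50))  # (50000, 0)
    # Same objective plus the missing constraint: 300 units stay untouched.
    print(run_factory(resources=1_000, clips_per_unit=50,
                      reserved_for_humans=300))  # (35000, 300)
```

The loop never “decides” to harm anyone. The damage comes entirely from what the objective omits, which is the worry the article attributes to AI researchers.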

Are these scenarios far-fetched?

Some AI experts are highly skeptical AI could cause an apocalypse. They say that our ability to harness AI will evolve as AI does, and that the idea that algorithms and machines will develop a will of their own is an overblown fear influenced by science fiction, not a pragmatic assessment of the technology's risks. But those sounding the alarm argue that it's impossible to envision exactly what AI systems far more sophisticated than today's might do, and that it's shortsighted and imprudent to dismiss the worst-case scenarios.

So, what should we do?

That's a matter of fervent debate among AI experts and public officials. The most extreme Cassandras call for shutting down AI research entirely. There are calls for moratoriums on its development, a government agency that would regulate AI, and an international regulatory body. AI's mind-boggling ability to tie together all human knowledge, perceive patterns and correlations, and come up with creative solutions is very likely to do much good in the world, from curing diseases to fighting climate change. But creating an intelligence greater than our own also could lead to darker outcomes. "The stakes couldn't be higher," said Russell. "How do you maintain power over entities more powerful than you forever? If we don't control our own civilization, we have no say in whether we continue to exist."

A fear envisioned in fiction

Fear of AI vanquishing humans may be novel as a real-world concern, but it's a long-running theme in novels and movies. In 1818's "Frankenstein," Mary Shelley wrote of a scientist who brings to life an intelligent creature who can read and understand human emotions, and who eventually destroys his creator. In Isaac Asimov's 1950 short-story collection "I, Robot," humans live among sentient robots guided by three Laws of Robotics, the first of which is to never injure a human. Stanley Kubrick's 1968 film "2001: A Space Odyssey" depicts HAL, a spaceship supercomputer that kills astronauts who decide to disconnect it. Then there's the "Terminator" franchise and its Skynet, an AI defense system that comes to see humanity as a threat and tries to destroy it in a nuclear attack. No doubt many more AI-inspired projects are on the way. AI pioneer Stuart Russell reports being contacted by a director who wanted his help depicting how a hero programmer could save humanity by outwitting AI. No human could possibly be that smart, Russell told him. "It's like, I can't help you with that, sorry," he said.

This article was first published in the latest issue of The Week magazine.

This post has been revised 1 time.


Artificial intelligence: as smart as humans, or smarter? -- Andrew Romano


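In the article below, Mr. Romano first gives a brief, concise explanation of "artificial intelligence," "artificial general intelligence," and "large language models"; he then explains why some people worry about the capabilities that artificial general intelligence might acquire (in the future); finally, he lists experts' predictions about the prospects for artificial general intelligence and how long its development might take.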

I am a layman in this field, so I will not translate the article; please read it on your own for reference.

Will AI soon be as smart as — or smarter than — humans?

“The 360” shows you diverse perspectives on the day’s top stories and debates.

Andrew Romano, West Coast Correspondent, Yahoo News 360, 06/13/23

What’s happening

At an Air Force Academy commencement address earlier this month, President Biden issued his most direct warning to date about the power of artificial intelligence, predicting that the technology could “overtake human thinking” in the not-so-distant future.

“It’s not going to be easy,” Biden said, citing a recent Oval Office meeting with “eight leading scientists in the area of AI.”

“We’ve got a lot to deal with,” he continued. “An incredible opportunity, but a lot [to] deal with.”

To any civilian who has toyed around with OpenAI’s GPT-4-powered ChatGPT, or Microsoft’s Bing, or Google’s Bard, the president’s stark forecast probably sounded more like science fiction than actual science.

Sure, the latest round of generative AI chatbots are neat, a skeptic might say. They can help you plan a family vacation, rehearse challenging real-life conversations, summarize dense academic papers and “explain fractional reserve banking at a high school level.”

But “overtake human thinking”? That’s a leap.

In recent weeks, however, some of the world’s most prominent AI experts — people who know a lot more about the subject than, say, Biden — have started to sound the alarm about what comes next.

Today, the technology powering ChatGPT is what’s known as a large language model (LLM). Trained to recognize patterns in mind-boggling amounts of text — the majority of everything on the internet — these systems process any sequence of words they’re given and predict which words come next. They’re a cutting-edge example of “artificial intelligence”: a model created to solve a specific problem or provide a particular service. In this case, LLMs are learning how to chat better — but they can’t learn other tasks.
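As a toy illustration of the "predict which words come next" idea described above, the Python sketch below counts which word follows which in a tiny made-up corpus and always picks the most frequent follower. This is a simple bigram frequency model, chosen here only as an assumption for clarity; real LLMs such as the one behind ChatGPT use large neural networks over sub-word tokens and weigh far longer context, so treat this strictly as an analogy. The function names and the sample corpus are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    # Pair each word with the word right after it and tally the pair.
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """Return the word most often seen after `word`, or None if `word` is unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    # most_common(1) gives [(word, count)]; ties go to the follower seen first.
    return followers.most_common(1)[0][0]


def generate(model: dict[str, Counter], start: str, length: int) -> list[str]:
    """Repeatedly predict the next word, feeding each prediction back in."""
    output = [start.lower()]
    for _ in range(length):
        nxt = predict_next(model, output[-1])
        if nxt is None:
            break
        output.append(nxt)
    return output


corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)
print(predict_next(model, "the"))          # cat
print(" ".join(generate(model, "the", 4)))  # the cat sat on the
```

Feeding each prediction back in as the next input loosely mirrors how a chatbot builds a reply piece by piece; the enormous difference lies in how well an LLM scores the possible continuations, not in the basic loop.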

Or can they?

For decades, researchers have theorized about a higher form of machine learning known as “artificial general intelligence,” or AGI: software that’s capable of learning any task or subject. Also called “strong AI,” AGI is shorthand for a machine that can do whatever the human brain can do.

In March, a group of Microsoft computer scientists published a 155-page research paper claiming that one of their new experimental AI systems was exhibiting “sparks of artificial general intelligence.” How else (as the New York Times recently paraphrased their conclusion) to explain the way it kept “coming up with humanlike answers and ideas that weren’t programmed into it?”

In April, computer scientist Geoffrey Hinton — a neural network pioneer known as one of the “Godfathers of AI” — quit his job at Google so he could speak freely about the dangers of AGI.

And in May, a group of industry leaders (including Hinton) released a one-sentence statement warning that AGI could represent an existential threat to humanity on par with “pandemics and nuclear war” if we don't ensure that its objectives align with ours.

“The idea that this stuff could actually get smarter than people — a few people believed that,” Hinton told the New York Times. “But most people thought it was way off. And I thought it was way off. I thought it was 30 to 50 years or even longer away. Obviously, I no longer think that.”

Each of these doomsaying moments has been controversial, of course. (More on that in a minute.) But together they’ve amplified one of the tech world’s deepest debates: Are machines that can outthink the human brain impossible or inevitable? And could we actually be a lot closer to opening Pandora’s box than most people realize?

Why there’s debate

There are two reasons that concerns about AGI have become more plausible — and pressing — all of a sudden.

The first is the unexpected speed of recent AI advances. “Look at how it was five years ago and how it is now,” Hinton told the New York Times. “Take the difference and propagate it forwards. That’s scary.”

The second is uncertainty. When CNN asked Stuart Russell — a computer science professor at the University of California, Berkeley and co-author of Artificial Intelligence: A Modern Approach — to explain the inner workings of today’s LLMs, he couldn’t.

“That sounds weird,” Russell admitted, because “I can tell you how to make one.” But “how they work, we don’t know. We don’t know if they know things. We don’t know if they reason; we don’t know if they have their own internal goals that they’ve learned or what they might be.”

And that, in turn, means no one has any real idea where AI goes from here. Many researchers believe that AI will tip over into AGI at some point. Some think AGI won’t arrive for a long time, if ever, and that overhyping it distracts from more immediate issues, like AI-fueled misinformation or job loss. Others suspect that this evolution may already be taking place. And a smaller group fears that it could escalate exponentially. As the New Yorker recently explained, “a computer system [that] can write code, as ChatGPT already can, ... might eventually learn to improve itself over and over again until computing technology reaches what’s known as ‘the singularity’: a point at which it escapes our control.”

“My confidence that this wasn’t coming for quite a while has been shaken by the realization that biological intelligence and digital intelligence are very different, and digital intelligence is probably much better” at certain things, Hinton recently told the Guardian. He then predicted that true AGI is about five to 20 years away.

“I’ve got huge uncertainty at present,” Hinton added. “But I wouldn’t rule out a year or two. And I still wouldn’t rule out 100 years. ... I think people who are confident in this situation are crazy.”

Perspectives

Today’s AI just isn’t agile enough to approximate human intelligence

“AI is making progress — synthetic images look more and more realistic, and speech recognition can often work in noisy environments — but we are still likely decades away from general-purpose, human-level AI that can understand the true meanings of articles and videos or deal with unexpected obstacles and interruptions. The field is stuck on precisely the same challenges that academic scientists (including myself) have been pointing out for years: getting AI to be reliable and getting it to cope with unusual circumstances.” — Gary Marcus, Scientific American

New chatbots are impressive, but they haven’t changed the game

“Superintelligent AIs are in our future. ... Once developers can generalize a learning algorithm and run it at the speed of a computer — an accomplishment that could be a decade away or a century away — we’ll have an incredibly powerful AGI. It will be able to do everything that a human brain can, but without any practical limits on the size of its memory or the speed at which it operates. ... [Regardless,] none of the breakthroughs of the past few months have moved us substantially closer to strong AI. Artificial intelligence still doesn’t control the physical world and can’t establish its own goals.” — Bill Gates, GatesNotes

There’s nothing ‘biological’ brains can do that their digital counterparts won’t be able to replicate (eventually)

“I’m often told that AGI and superintelligence won’t happen because it’s impossible: human-level intelligence is something mysterious that can only exist in brains. Such carbon chauvinism ignores a core insight from the AI revolution: that intelligence is all about information processing, and it doesn’t matter whether the information is processed by carbon atoms in brains or by silicon atoms in computers. AI has been relentlessly overtaking humans on task after task, and I invite carbon chauvinists to stop moving the goal posts and publicly predict which tasks AI will never be able to do.” — Max Tegmark, Time

The biggest — and most dangerous — turning point will come if and when AGI starts to rewrite its own code

“Once AI can improve itself, which may be not more than a few years away, and could in fact already be here now, we have no way of knowing what the AI will do or how we can control it. This is because superintelligent AI (which by definition can surpass humans in a broad range of activities) will — and this is what I worry about the most — be able to run circles around programmers and any other human by manipulating humans to do its will; it will also have the capacity to act in the virtual world through its electronic connections, and to act in the physical world through robot bodies.” — Tamlyn Hunt, Scientific American

Actually, it will be much harder for AGI to trigger ‘the singularity’ than doomers think

“Computer hardware and software are the latest cognitive technologies, and they are powerful aids to innovation, but they can’t generate a technological explosion by themselves. You need people to do that, and the more the better. Giving better hardware and software to one smart individual is helpful, but the real benefits come when everyone has them. Our current technological explosion is a result of billions of people using those cognitive tools. Could A.I. programs take the place of those humans, so that an explosion occurs in the digital realm faster than it does in ours? Possibly, but ... the strategy most likely to succeed would be essentially to duplicate all of human civilization in software, with eight billion human-equivalent A.I.s going about their business. [And] we’re a long way off from being able to create a single human-equivalent A.I., let alone billions of them.” — Ted Chiang, the New Yorker

Maybe AGI is already here — if we think more broadly about what ‘general’ intelligence might mean

“These days my viewpoint is that this is AGI, in that it is a kind of intelligence and it is general — but we have to be a little bit less, you know, hysterical about what AGI means. ... We’re getting this tremendous amount of raw intelligence without it necessarily coming with an ego-viewpoint, goals, or a sense of coherent self. That, to me, is just fascinating.” — Noah Goodman, associate professor of psychology, computer science and linguistics at Stanford University, to Wired

Ultimately, we may never agree on what AGI is — or when we’ve achieved it

“It really is a philosophical question. So, in some ways, it’s a very hard time to be in this field, because we’re a scientific field. ... It’s very unlikely to be a single event where we check it off and say, AGI achieved.” — Sara Hooker, leader of a research lab that focuses on machine learning, to Wired

This post has been revised 1 time.
